Summary

Concept

- Researched at TIER IV, Inc.

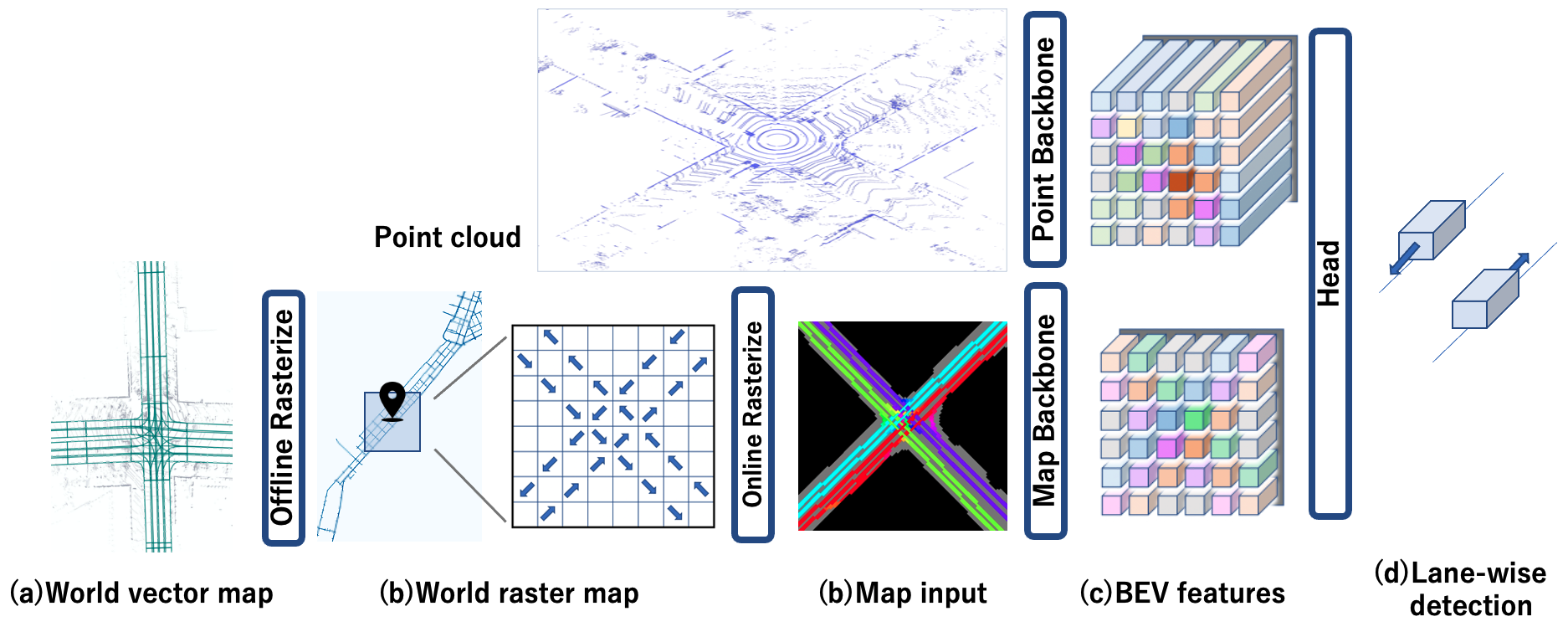

In this study, we developed LaneFusion, a 3D object detection framework that fuses LiDAR data with an HD map in vector format. It addresses a common failure of existing detection models: inferring object headings in the opposite direction.

Method

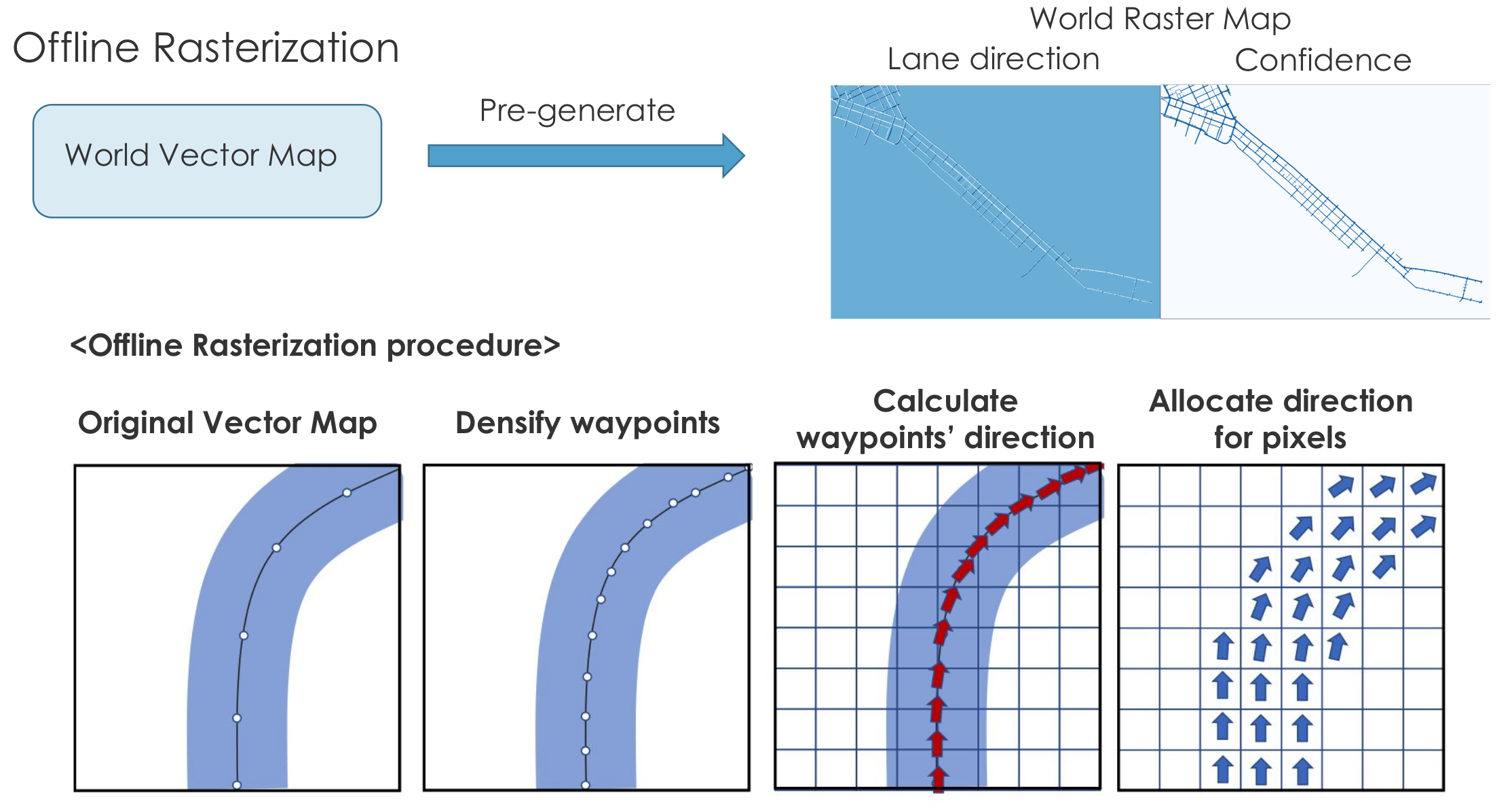

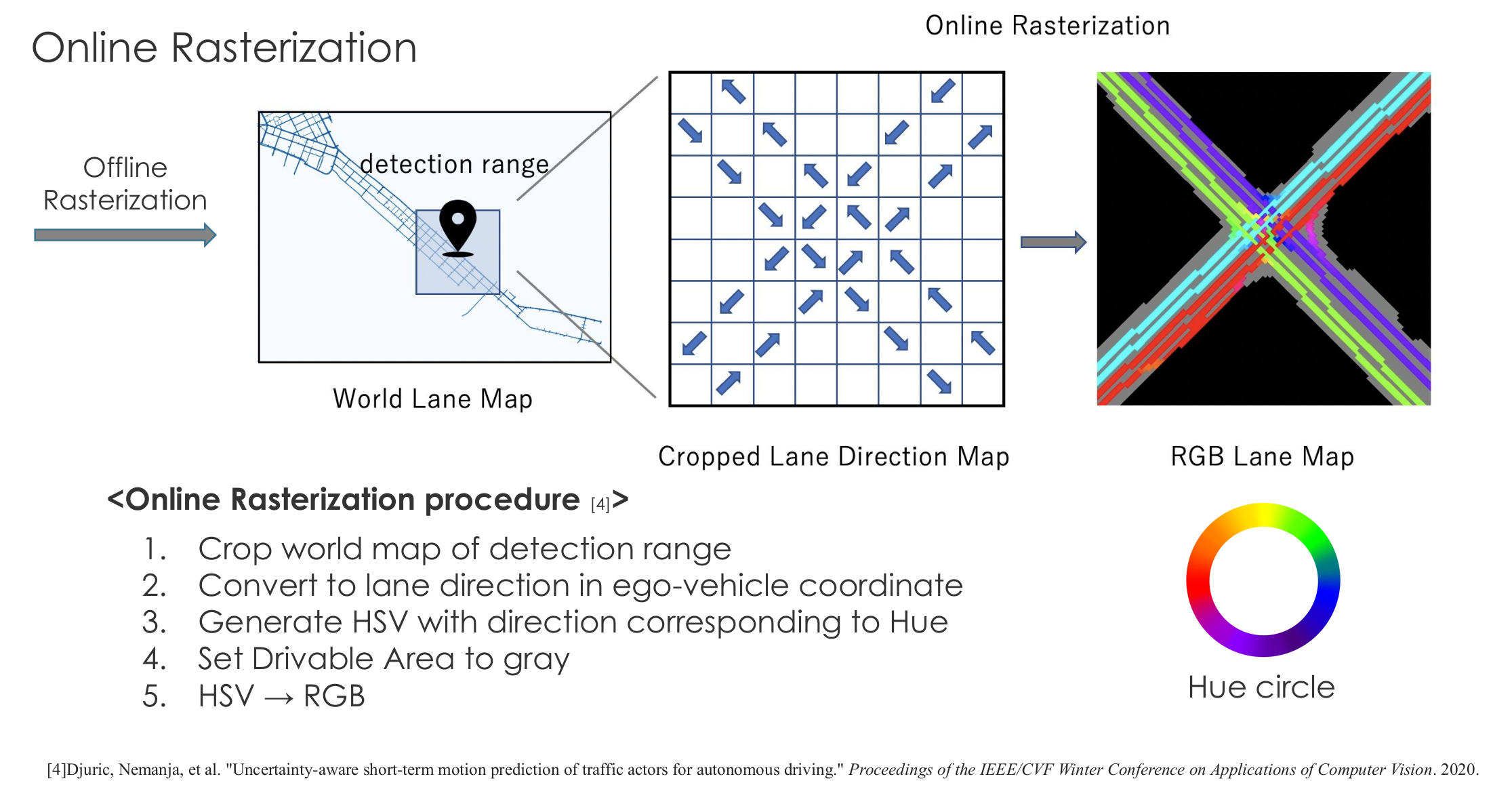

LaneFusion combines an offline and an online rasterization step to convert the vector map into raster form. This overcomes the difficulty of feeding vector-format maps into current mainstream convolutional neural networks (CNNs).
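This summary does not specify what each rasterization step produces. The sketch below assumes one plausible split. The offline step burns every lane centerline of the vector map into a global grid once, storing the lane's driving direction as (cos, sin). The online step then crops an ego-centred bird's-eye-view (BEV) patch for each frame and rotates the directions into the ego frame, so that the patch could be stacked with a LiDAR BEV feature map. All of the following are hypothetical and not taken from the paper: the functions `rasterize_offline` and `rasterize_online`, the three-channel layout (occupancy, cos, sin), the nearest-cell lookup, and the convention that point order encodes driving direction.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
# Hypothetical sparse global raster: (row, col) -> (cos, sin) of the lane's
# driving direction in the map frame.
GlobalRaster = Dict[Tuple[int, int], Tuple[float, float]]


def rasterize_offline(lanes: List[List[Point]], res: float) -> GlobalRaster:
    """Assumed offline step: burn each lane centerline into a global grid once.

    Each lane is a polyline whose point order is assumed to give the driving
    direction.
    """
    raster: GlobalRaster = {}
    for lane in lanes:
        for (x0, y0), (x1, y1) in zip(lane, lane[1:]):
            length = math.hypot(x1 - x0, y1 - y0)
            if length == 0.0:
                continue
            c, s = (x1 - x0) / length, (y1 - y0) / length  # unit direction
            steps = max(1, math.ceil(length / (res / 2)))  # sample every <= res/2
            for k in range(steps + 1):
                t = k / steps
                x, y = x0 + t * (x1 - x0), y0 + t * (y1 - y0)
                raster[(math.floor(y / res), math.floor(x / res))] = (c, s)
    return raster


def rasterize_online(raster: GlobalRaster, res: float,
                     ego: Tuple[float, float, float],
                     size: int) -> List[List[List[float]]]:
    """Assumed online step: crop a size x size ego-centred BEV patch.

    Returns 3 channels (occupancy, cos, sin), with lane directions rotated
    from the map frame into the ego frame.
    """
    ex, ey, yaw = ego
    cy, sy = math.cos(yaw), math.sin(yaw)
    bev = [[[0.0] * size for _ in range(size)] for _ in range(3)]
    half = size * res / 2
    for i in range(size):          # row index -> ego-frame y
        for j in range(size):      # column index -> ego-frame x
            lx = -half + (j + 0.5) * res
            ly = -half + (i + 0.5) * res
            # Transform the cell centre from the ego frame to the map frame.
            mx = ex + cy * lx - sy * ly
            my = ey + sy * lx + cy * ly
            cell = raster.get((math.floor(my / res), math.floor(mx / res)))
            if cell is None:
                continue
            c, s = cell
            bev[0][i][j] = 1.0
            # Rotate the map-frame direction by -yaw into the ego frame.
            bev[1][i][j] = cy * c + sy * s
            bev[2][i][j] = -sy * c + cy * s
    return bev


if __name__ == "__main__":
    grid = rasterize_offline([[(-10.0, 0.5), (10.0, 0.5)]], res=1.0)
    facing_lane = rasterize_online(grid, 1.0, (0.0, 0.0, 0.0), size=4)
    facing_back = rasterize_online(grid, 1.0, (0.0, 0.0, math.pi), size=4)
    print(facing_lane[1], facing_back[1])
```

Encoding direction as (cos, sin) rather than as a raw angle avoids the wrap-around at ±π. With the ego vehicle turned by π, the same lane appears with cos ≈ -1. A CNN could plausibly use that kind of cue to tell a vehicle's front from its back.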

Experiments

We confirmed that the proposed method increased the 3D average precision (AP) and average orientation similarity (AOS) of the vehicle class by up to 6.56 and 10.65 points, respectively.
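The AOS gain being larger than the AP gain is consistent with the heading problem described above. A box whose heading is flipped by π has the same footprint, so it can still count as a true positive for AP, while AOS penalizes it. The sketch below assumes the KITTI-style definition. Under that definition, each true positive contributes an orientation similarity of (1 + cos Δθ)/2, and both metrics are averaged over 11 interpolated recall points. The function `ap_and_aos` and its input format are hypothetical. The evaluation protocol used in the paper may differ, for example in the number of recall points.

```python
import math
from typing import List, Tuple

# (confidence score, matched to a ground-truth box?, heading error in radians)
Detection = Tuple[float, bool, float]


def ap_and_aos(dets: List[Detection], num_gt: int,
               num_points: int = 11) -> Tuple[float, float]:
    """Interpolated AP and AOS, following a KITTI-style definition (assumed)."""
    ranked = sorted(dets, key=lambda d: d[0], reverse=True)
    recalls, precisions, sims = [], [], []
    tp, sim_sum = 0, 0.0
    for k, (_, matched, dtheta) in enumerate(ranked, start=1):
        if matched:
            tp += 1
            # Similarity of one true positive: 1 if the heading is correct,
            # 0 if it is flipped by pi.
            sim_sum += (1.0 + math.cos(dtheta)) / 2.0
        recalls.append(tp / num_gt)
        precisions.append(tp / k)
        sims.append(sim_sum / k)  # precision with each TP weighted by similarity

    def interpolate(values: List[float], r: float) -> float:
        # Maximum value among operating points whose recall is at least r.
        cands = [v for rec, v in zip(recalls, values) if rec >= r]
        return max(cands) if cands else 0.0

    points = [i / (num_points - 1) for i in range(num_points)]
    ap = sum(interpolate(precisions, r) for r in points) / num_points
    aos = sum(interpolate(sims, r) for r in points) / num_points
    return ap, aos


if __name__ == "__main__":
    # Two vehicles, both detected with correct boxes, one heading flipped by pi.
    print(ap_and_aos([(0.9, True, 0.0), (0.8, True, math.pi)], num_gt=2))
```

In this example, AP stays at 1.0 while AOS drops to 8.5/11 ≈ 0.77. The flipped heading is invisible to AP but costs half of the orientation score at high recall.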

Reference

- Taisei Fujimoto, Satoshi Tanaka, and Shinpei Kato: LaneFusion: 3D Object Detection with Rasterized Lane Map, the 2022 33rd IEEE Intelligent Vehicles Symposium (IV 2022), Proceedings, pp. 396-403.