summary

Method

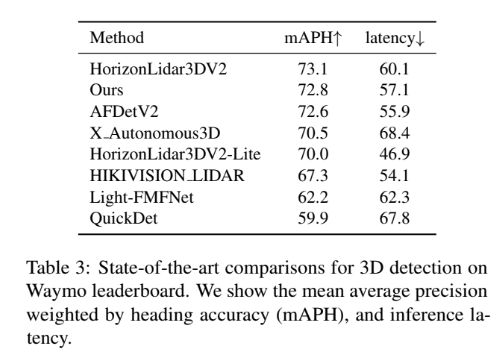

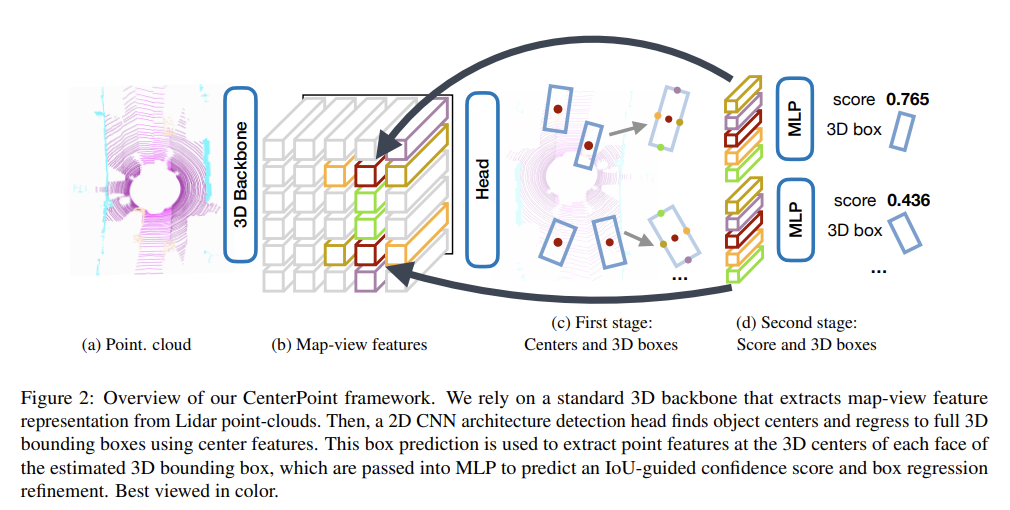

- Overall architecture

- Backbone: pointcloud -> map-view features

- region proposal (Detection Head first stage): map-view features -> center position, 3d boxes

- fine detection (Detection Head second stage): proposal map-view features -> score, 3d boxes

Preprocess

- Densification

- Added in v2

- Uses n frames of point clouds (n = 3 for nuScenes)

- Appends the time difference from the current frame to each frame's points as a feature
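The densification step above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `densify`, the tuple layout, and the timestamps are assumptions, and the sweeps are assumed to be already transformed into the current frame's coordinates.

```python
def densify(sweeps, timestamps, current_time):
    """Merge sweeps into one cloud, appending each point's time lag as a feature.

    sweeps: list of sweeps; each sweep is a list of (x, y, z, intensity) tuples,
            assumed to be already expressed in the current frame's coordinates.
    timestamps: capture time in seconds of each sweep (hypothetical values below).
    """
    merged = []
    for points, t in zip(sweeps, timestamps):
        dt = current_time - t  # time lag to the current frame
        merged.extend((x, y, z, r, dt) for (x, y, z, r) in points)
    return merged


# n = 3 sweeps, matching the nuScenes setting noted above
sweeps = [
    [(1.0, 2.0, 0.5, 0.3), (1.5, 2.5, 0.4, 0.1)],  # oldest sweep
    [(1.1, 2.0, 0.5, 0.2)],
    [(1.2, 2.1, 0.5, 0.4)],  # current sweep
]
cloud = densify(sweeps, timestamps=[0.0, 0.5, 1.0], current_time=1.0)
print(len(cloud), cloud[0])  # 4 (1.0, 2.0, 0.5, 0.3, 1.0)
```

Points from the current sweep get a time lag of 0, so the network can tell recent points from older ones.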

Backbone : pointcloud -> map-view features

- PointPillars-based encoder

- pointcloud -> stacked pillars

- 9-dimensional feature extractor that includes 3D location (3), intensity (1), offsets to 3D pillar center (3), and offsets to 2D grid center (2)

- learned features

- pseudo image (bird’s-eye-view 2D feature map)

- Tricks

- Dynamic Voxelization

- Since v2

- Proposed in MVF; computed on the GPU for speed
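A rough sketch of the 9-dimensional pillar feature listed above, combined with the idea of dynamic voxelization (every point is kept, with no cap on points per pillar). The function name and the `pillar_size`, `x_min`, `y_min` parameters are assumptions for illustration. The "3D pillar center" is taken here to be the mean of the points in the pillar, as in PointPillars. The sketch runs on the CPU in plain Python, unlike the GPU version the notes mention.

```python
from collections import defaultdict


def pillar_features(points, pillar_size=0.5, x_min=0.0, y_min=0.0):
    """Group points into pillars and build a 9-dim feature per point.

    points: list of (x, y, z, intensity) tuples.
    All points are kept: no per-pillar sampling and no fixed pillar count.
    """
    pillars = defaultdict(list)
    for p in points:
        ix = int((p[0] - x_min) // pillar_size)
        iy = int((p[1] - y_min) // pillar_size)
        pillars[(ix, iy)].append(p)

    features = {}
    for (ix, iy), pts in pillars.items():
        n = len(pts)
        # Mean of the points in the pillar (assumed meaning of "3D pillar center")
        cx = sum(p[0] for p in pts) / n
        cy = sum(p[1] for p in pts) / n
        cz = sum(p[2] for p in pts) / n
        # Geometric center of the 2D grid cell
        gx = x_min + (ix + 0.5) * pillar_size
        gy = y_min + (iy + 0.5) * pillar_size
        features[(ix, iy)] = [
            (x, y, z, r, x - cx, y - cy, z - cz, x - gx, y - gy)
            for (x, y, z, r) in pts
        ]
    return features


points = [(0.1, 0.1, 1.0, 0.5), (0.3, 0.3, 2.0, 0.7), (0.9, 0.2, 0.0, 0.1)]
feats = pillar_features(points)
print(sorted(feats))  # [(0, 0), (1, 0)]
```

In the real encoder, these per-point features would then pass through a learned layer and be pooled per pillar to form the pseudo image.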

region proposal (Detection Head first stage): map-view features -> center position, 3d boxes

- bird’s-eye-view center estimation (Heatmap)

- Estimated quantities

- offset (x, y, z)

- z

- 3D size (w, l, h)

- orientation (sin α, cos α)

- velocity estimation

- Tricks

- The heatmap is sparse -> train against ground-truth object centers smoothed with a Gaussian kernel

- detection head: also outputs rotation

- Circular NMS: an NMS that also handles rotated boxes and is fast

- Double-flip testing: test-time augmentation that flips the input to produce symmetric heatmaps, whose predictions are averaged

- IoU-Aware Confidence Rectification Module

- Since v2

- Technique used in CIA-SSD

- one additional regression head to predict the IoU between object detections and corresponding ground truth boxes
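The Gaussian-smoothed heatmap target mentioned in the tricks above can be sketched like this. It is a simplified illustration: the function name is hypothetical and `sigma` is fixed here, whereas a real implementation would typically derive the kernel radius from the object size.

```python
import math


def draw_gaussian(heatmap, center, sigma):
    """Splat an unnormalized Gaussian peak at `center` (row, col), in place.

    Where objects overlap, the element-wise maximum is kept.
    """
    cy, cx = center
    for y, row in enumerate(heatmap):
        for x in range(len(row)):
            d2 = (x - cx) ** 2 + (y - cy) ** 2
            row[x] = max(row[x], math.exp(-d2 / (2.0 * sigma ** 2)))
    return heatmap


heatmap = [[0.0] * 7 for _ in range(7)]
draw_gaussian(heatmap, center=(3, 3), sigma=1.0)
print(heatmap[3][3])  # 1.0
```

Cells near the center receive non-zero targets, which gives the sparse heatmap denser supervision than a single positive pixel per object.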

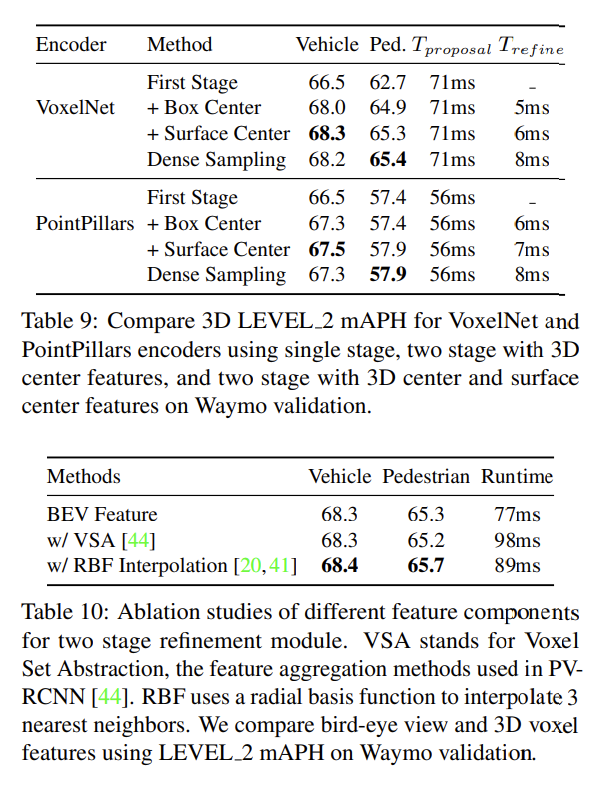

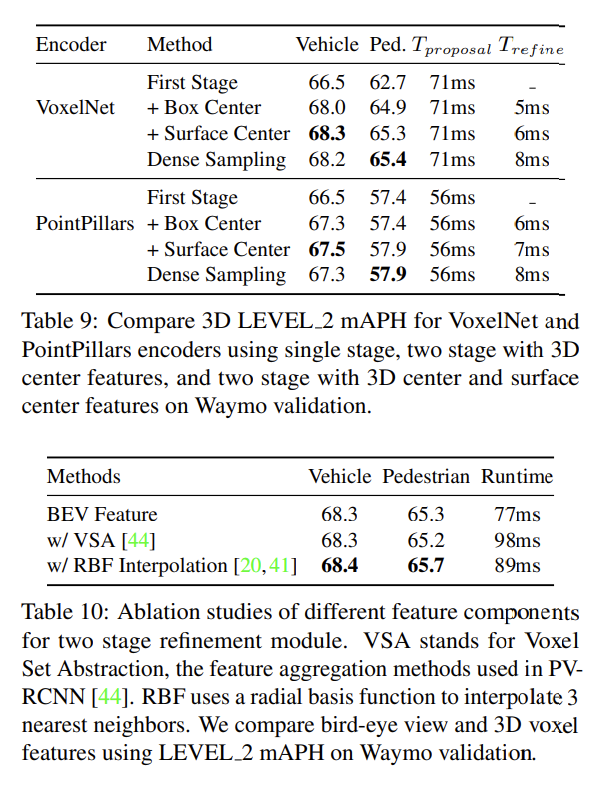
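Circular NMS, noted in the first-stage tricks, can be sketched as suppression based on the distance between BEV centers. The function name, the `(x, y, score)` tuple layout, and the `min_radius` parameter are assumptions for this example.

```python
def circle_nms(detections, min_radius):
    """Return indices of kept detections, highest score first.

    detections: list of (x, y, score) tuples with BEV center coordinates.
    A detection is dropped if its center lies within `min_radius` of an
    already kept, higher-scoring detection.
    """
    order = sorted(
        range(len(detections)), key=lambda i: detections[i][2], reverse=True
    )
    keep = []
    for i in order:
        xi, yi, _ = detections[i]
        far_enough = all(
            (xi - detections[k][0]) ** 2 + (yi - detections[k][1]) ** 2
            > min_radius ** 2
            for k in keep
        )
        if far_enough:
            keep.append(i)
    return keep


dets = [(0.0, 0.0, 0.9), (0.5, 0.0, 0.8), (5.0, 5.0, 0.7)]
print(circle_nms(dets, min_radius=1.0))  # [0, 2]
```

Only centers are compared, so box rotation never enters the computation. This avoids the rotated-IoU calculation that makes conventional 3D NMS slow.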

fine detection (Detection Head second stage): proposal map-view features -> score, 3d boxes

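- The second stage was added in v2

- Extracts ROI features from the map-view features around the center points obtained in the first stage, flattens them into a single vector, and feeds it to an MLP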
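A minimal sketch of the crop, flatten, and MLP flow described above. The square window, the layer sizes, the 8-value output (1 score + 7 box parameters), and the constant weights are all placeholders chosen for illustration. The actual model may sample the ROI features differently.

```python
def crop_roi(feature_map, center, half=1):
    """Crop a (2*half+1)^2 window of C-dim features around `center` (row, col),
    zero-padding outside the map, and flatten it into one vector."""
    h, w = len(feature_map), len(feature_map[0])
    c = len(feature_map[0][0])
    cy, cx = center
    flat = []
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            inside = 0 <= y < h and 0 <= x < w
            flat.extend(feature_map[y][x] if inside else [0.0] * c)
    return flat


def linear(x, weight, bias):
    """Compute weight @ x + bias for a single input vector."""
    return [
        sum(w_i * x_i for w_i, x_i in zip(row, x)) + b
        for row, b in zip(weight, bias)
    ]


def mlp(x, layers):
    """Apply linear layers with ReLU in between (none after the last layer)."""
    for i, (weight, bias) in enumerate(layers):
        x = linear(x, weight, bias)
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]
    return x


# 4x4 map-view feature map with C = 2 channels (dummy values)
feature_map = [[[1.0, 2.0] for _ in range(4)] for _ in range(4)]
roi = crop_roi(feature_map, center=(1, 1))  # 3 * 3 * 2 = 18 values
layers = [
    ([[0.1] * 18 for _ in range(4)], [0.0] * 4),  # hidden layer (untrained)
    ([[0.5] * 4 for _ in range(8)], [0.0] * 8),   # score + 7 box parameters
]
pred = mlp(roi, layers)
print(len(roi), len(pred))  # 18 8
```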

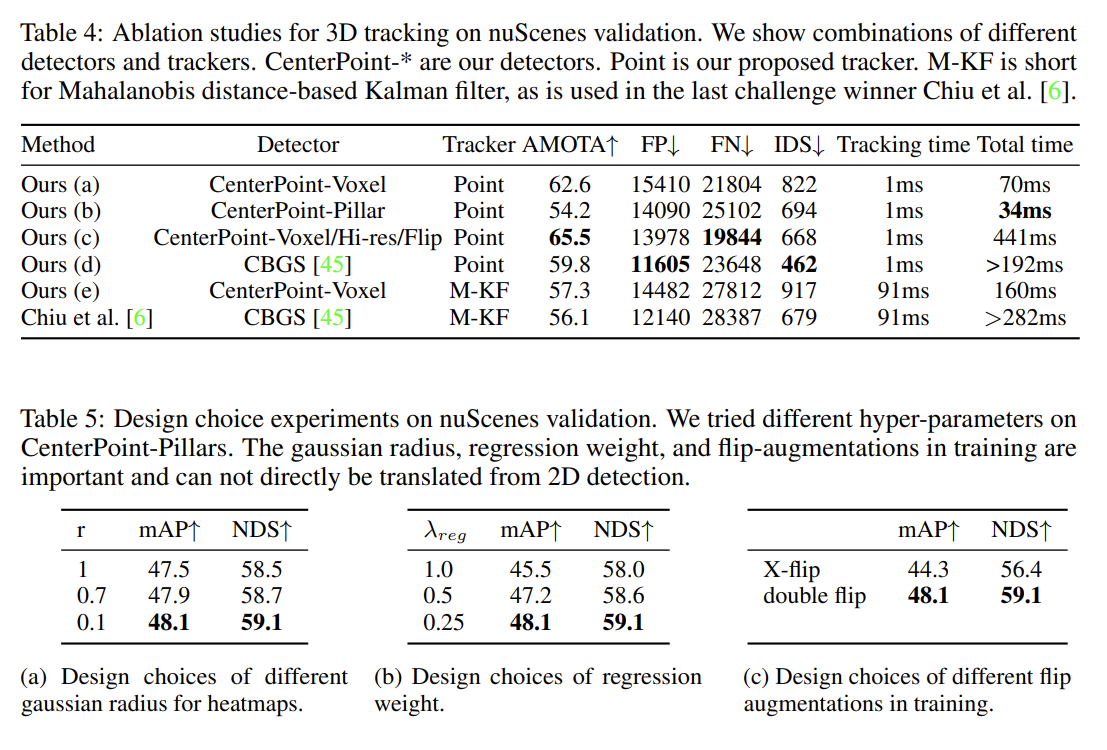

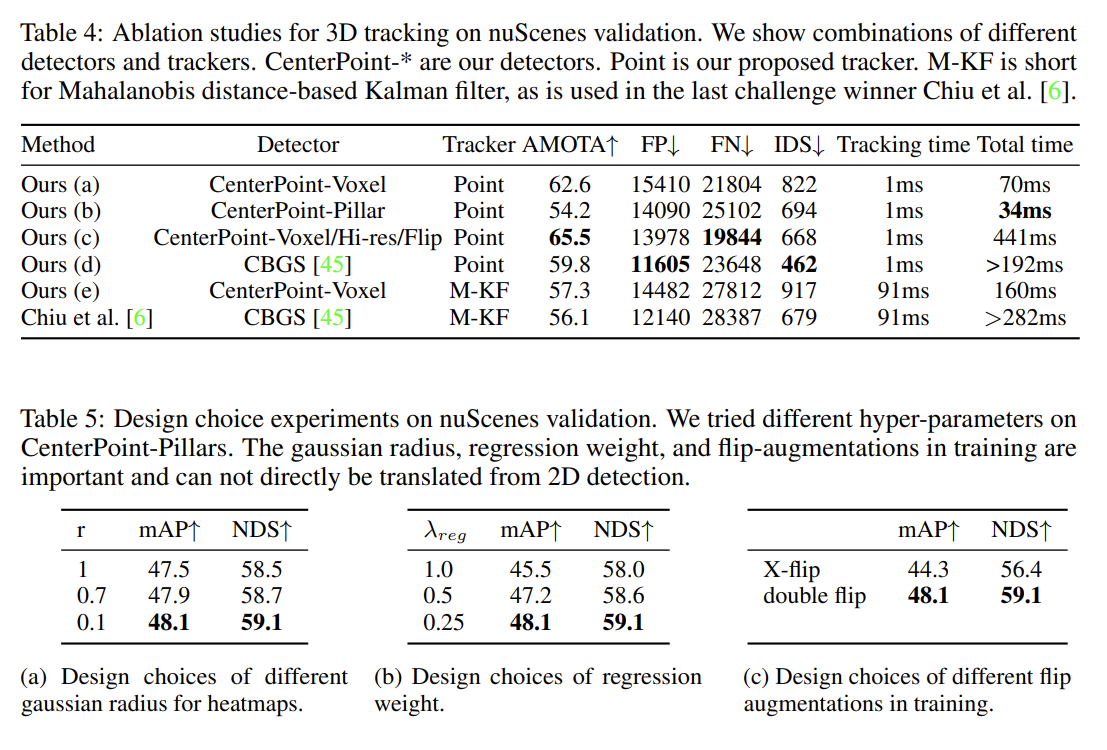

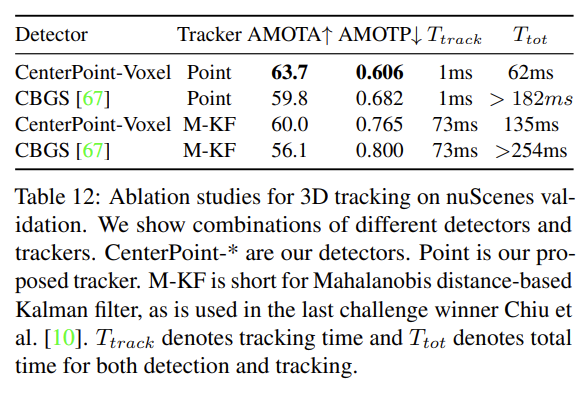

- Tracking

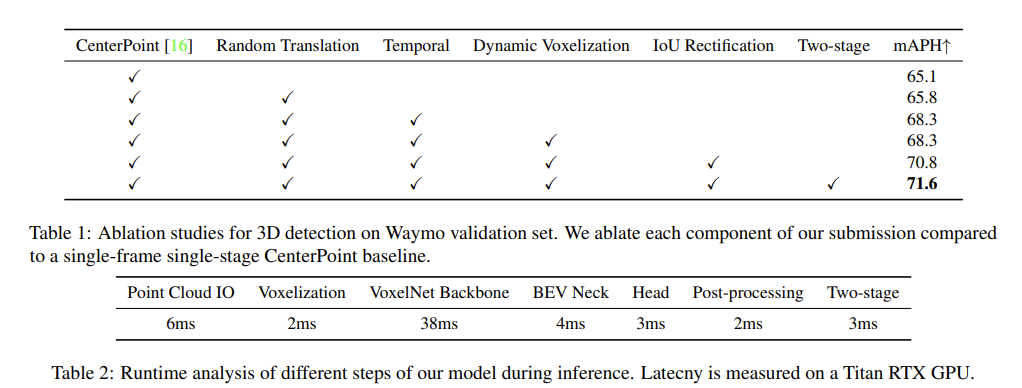

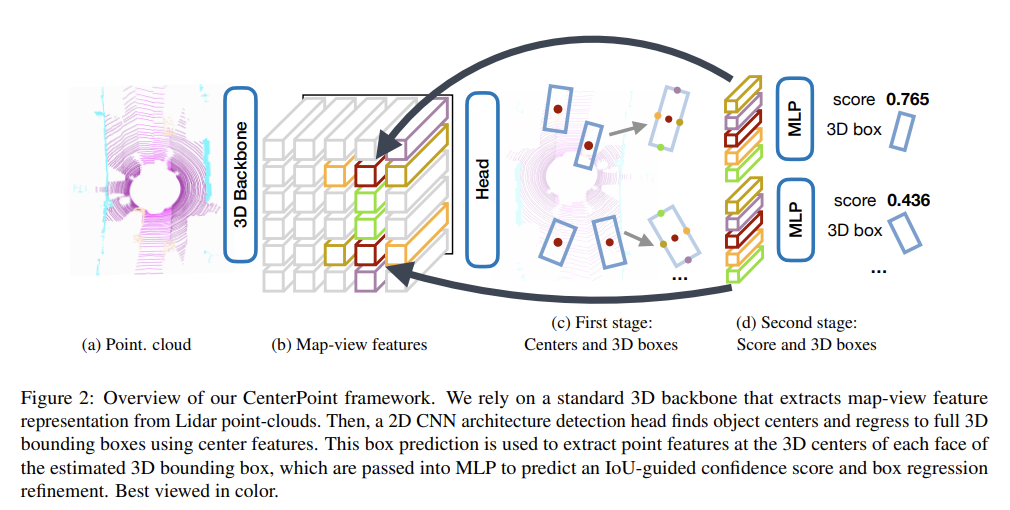

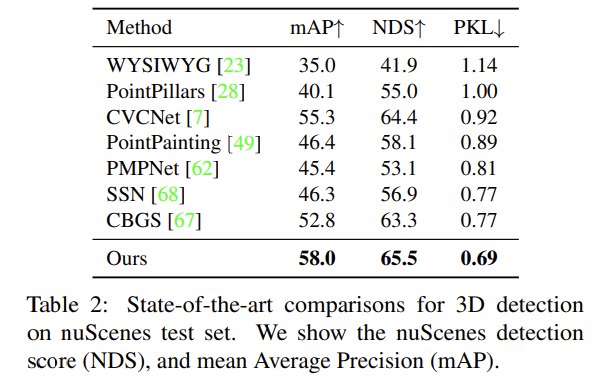

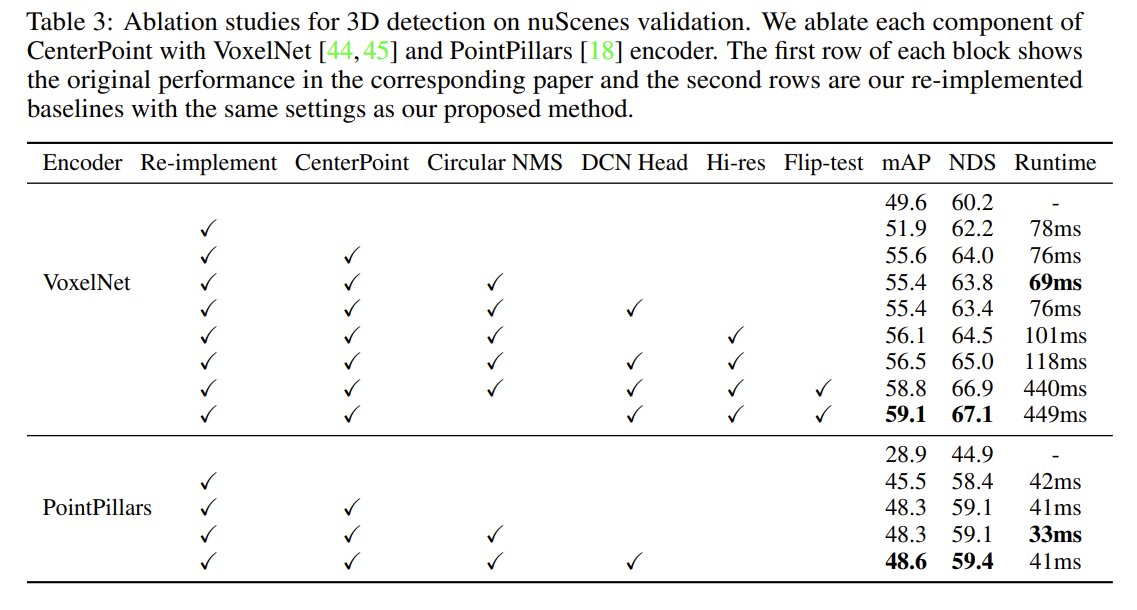

Experiment

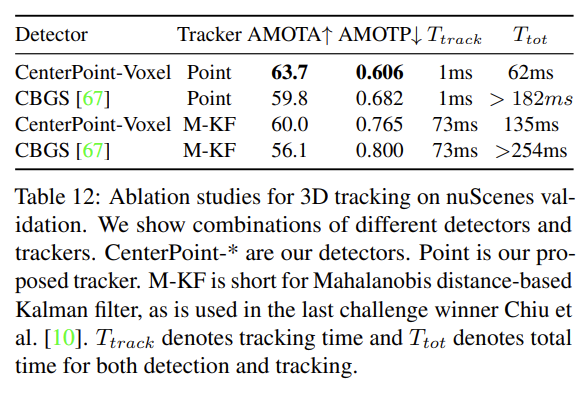

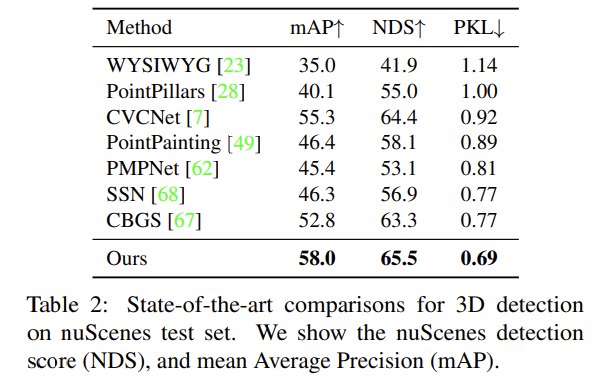

nuScenes

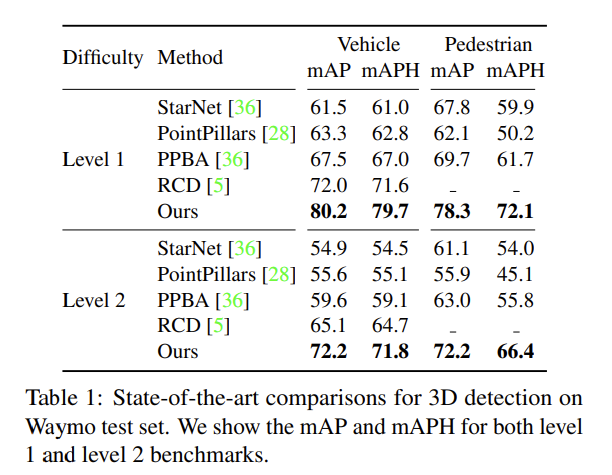

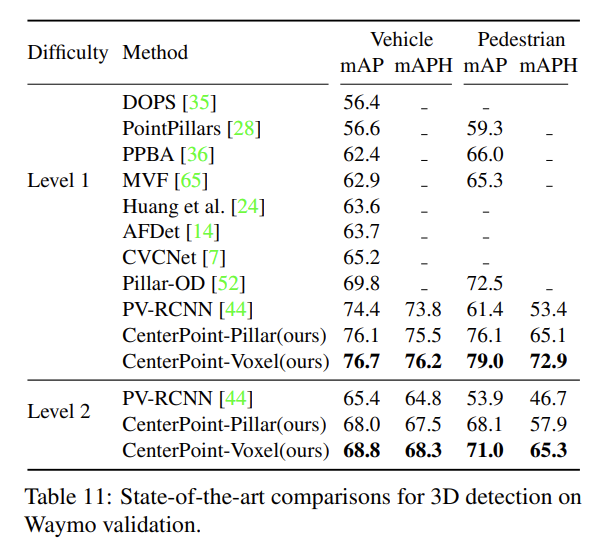

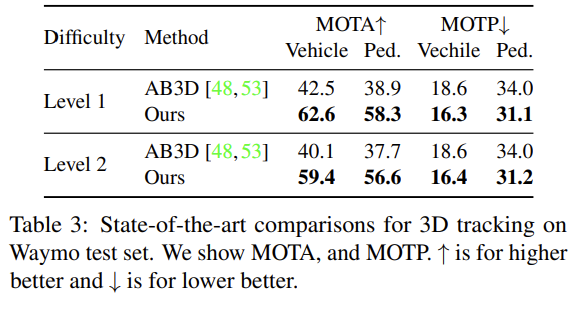

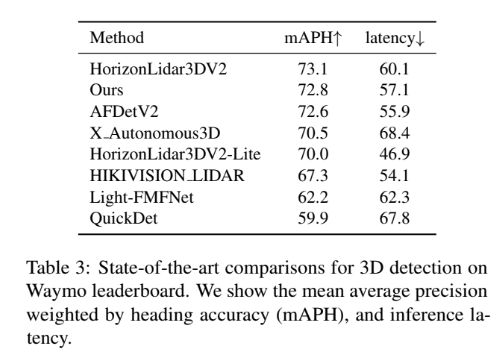

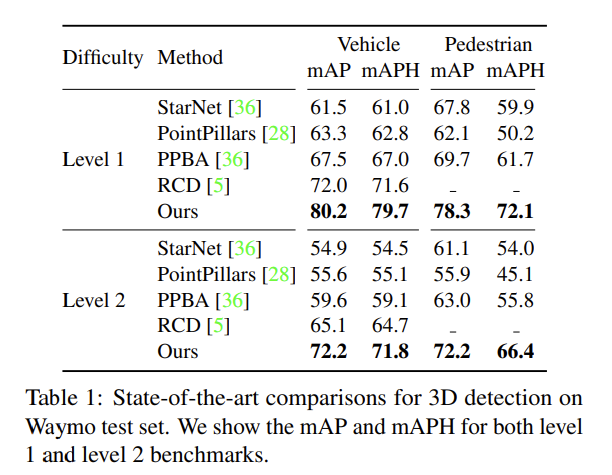

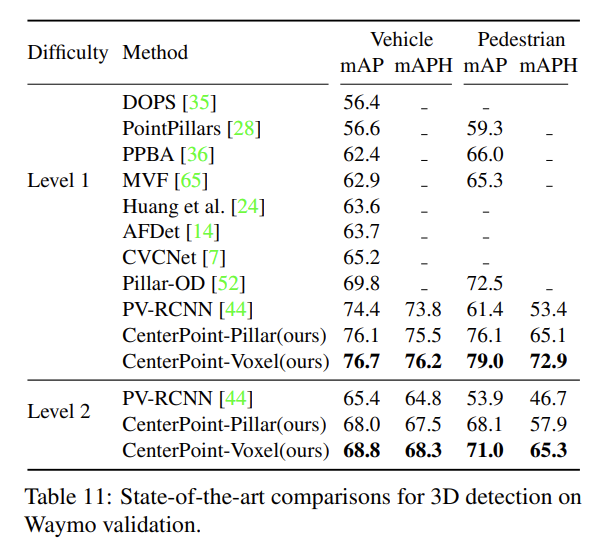

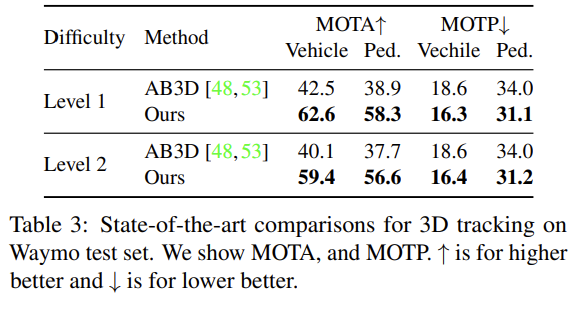

Waymo

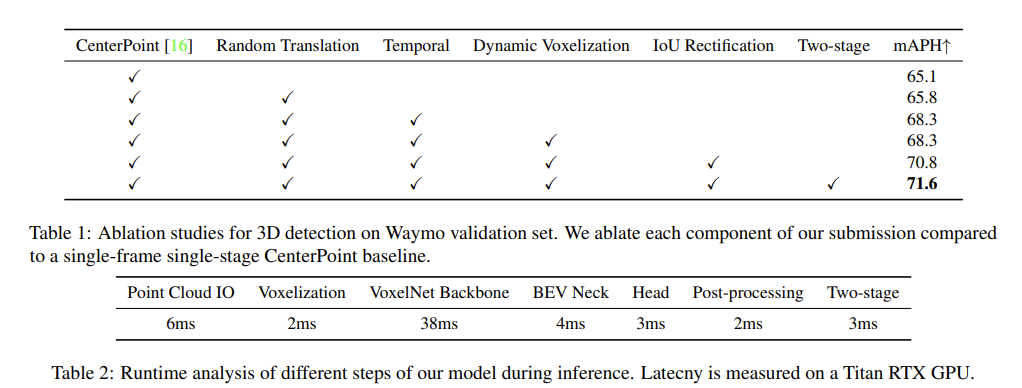

CenterPoint++ submission to the Waymo Real-time 3D Detection Challenge (CVPR 2021 workshop)

summary

- Well organized and easy to follow (if you already have some background)

- The real time version of CenterPoint ranked 2nd in the Waymo Real-time 3D detection challenge (72.8 mAPH / 57.1 ms)

- Tips

- Temporal Multi-sweep Point Cloud Input

- Dynamic Voxelization

Method

- pointcloud: $ P = \{(x, y, z, r)_i\} $

- bounding box: $ b = (u, v, d, w, l, h, \alpha) $
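As a small illustration of the box parameterization above, the sketch below packs regressed values into $b$ and recovers $\alpha$ from a (sin α, cos α) pair with `atan2`. The `Box3D` class and the `decode_box` helper are hypothetical names, not taken from the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Box3D:
    u: float      # center x
    v: float      # center y
    d: float      # center z
    w: float      # width
    l: float      # length
    h: float      # height
    alpha: float  # yaw angle in radians


def decode_box(center, size, sin_a, cos_a):
    """Build a box from a center, a size, and an orientation given as (sin, cos)."""
    u, v, d = center
    w, l, h = size
    return Box3D(u, v, d, w, l, h, math.atan2(sin_a, cos_a))


box = decode_box((10.0, -2.0, 0.5), (1.9, 4.5, 1.6), sin_a=1.0, cos_a=0.0)
print(round(box.alpha, 4))  # 1.5708
```

Regressing (sin α, cos α) instead of α directly avoids the discontinuity at ±π, and `atan2` restores a single angle in (-π, π].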

evaluation